A brief history of electricity and electricity grids

Much of the way in which the electricity system operates is driven by the physical characteristics of electricity. Ever since Frenchman Hippolyte Pixii built the first dynamo in 1832, people have been trying to both exploit and mitigate the phenomenon. In fact, entirely by accident, Pixii developed the first alternator – his model created pulses of electricity separated by no current – but as he had no idea what to do with the changing current, he concentrated on eliminating it, converting alternating current to direct current using a commutator.

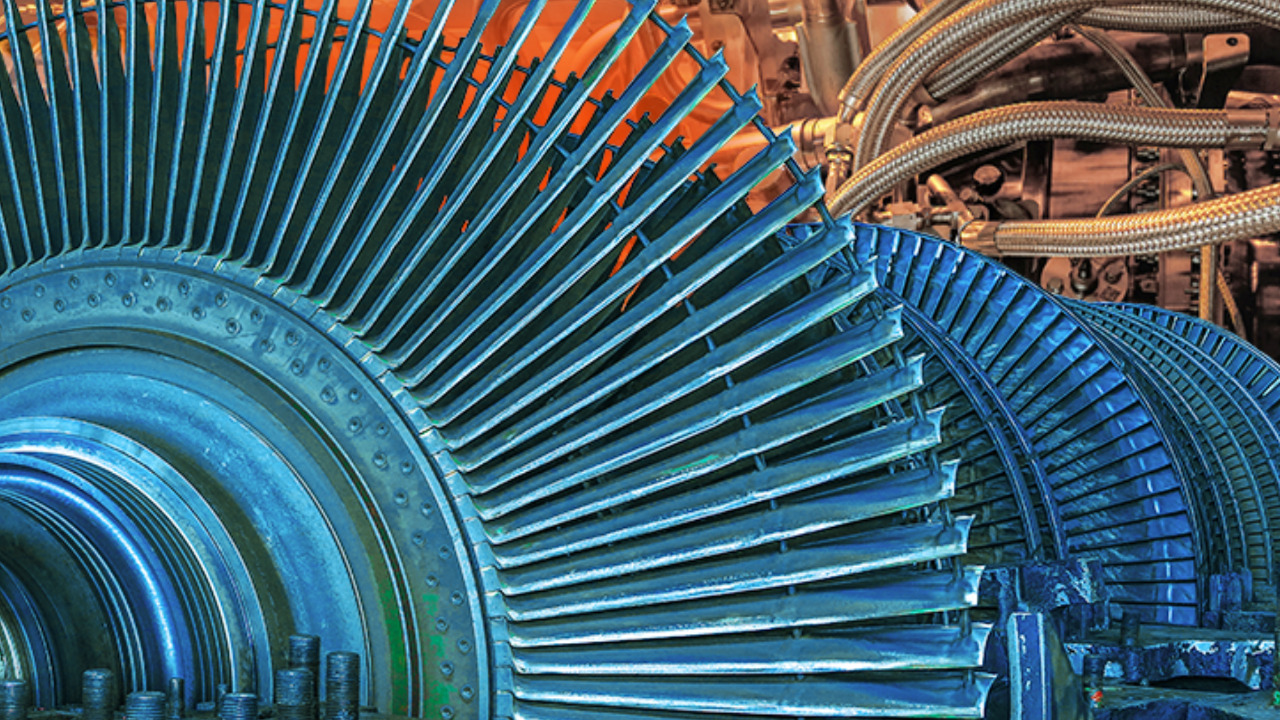

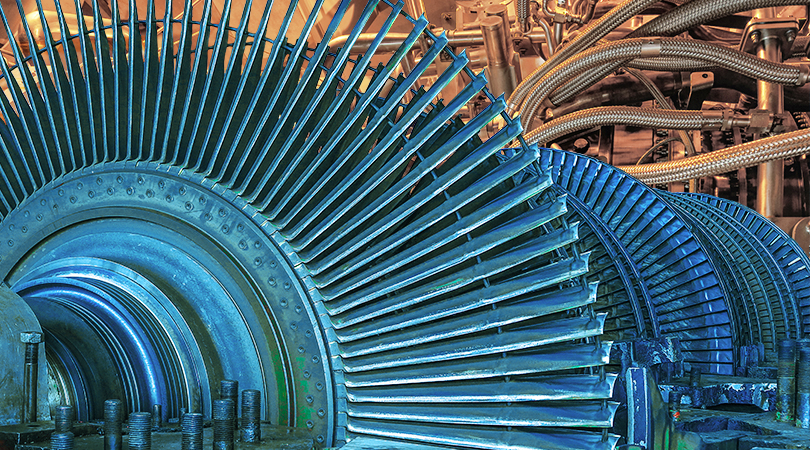

In the most basic sense, a generator is one magnet rotating while inside the influence of another magnet’s magnetic field. The generator consists of stationary magnets – the stator – which create a powerful magnetic field; and a rotating magnet – the rotor – which distorts and cuts through the magnetic lines of flux of the stator, generating electricity. Due to the rotation of the magnet, the direction of the current periodically reverses – this is known as alternating current and is the basic output of a generator. For this reason, generators are also known as alternators.

This is illustrated here. A bent wire is placed between the poles of a magnet and set to rotate. As the loop of wire turns, each side will sometimes be travelling up and sometimes down – when it moves up, the current moves in one direction, and when it moves down the current moves in the other direction.

When the loop is perpendicular to the lines of magnetic flux, the current is zero, while when it is parallel to the lines of flux it is at a maximum. This is the origin of the sine waveform of the current and voltage.
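The relationship between the loop's position and its output can be sketched numerically. This is a minimal illustration rather than a model of any real machine: it assumes an ideal flat coil in a uniform field, and the function name and default values (field strength, coil area, 50 Hz rotation) are hypothetical choices made for the example. It computes the induced voltage, which drives the current described above.

```python
import math

def loop_emf(angle_rad, turns=1, flux_density_t=0.5, area_m2=0.01,
             omega_rad_s=2 * math.pi * 50):
    """Induced EMF in volts for an ideal coil rotating in a uniform field.

    angle_rad is the angle between the coil's normal and the field lines,
    so 0 means the plane of the loop is perpendicular to the flux.
    All default values are illustrative assumptions.
    """
    peak_emf = turns * flux_density_t * area_m2 * omega_rad_s
    return peak_emf * math.sin(angle_rad)

if __name__ == "__main__":
    # Step through one full rotation in 45 degree increments.
    for degrees in range(0, 361, 45):
        emf = loop_emf(math.radians(degrees))
        print(f"{degrees:3d} deg -> {emf:7.3f} V")
```

The output is zero when the loop is perpendicular to the flux, peaks a quarter turn later when it is parallel, and reverses sign in the second half of the rotation, tracing out one cycle of a sine wave.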

An ac generator can be modified to generate direct current by replacing the metal rings in the ac generator with a commutator which consists of a hollow metallic cylinder split into two halves that are insulated from each other.

Two brushes are in contact with each part of the cylinder at the moment when the coil is perpendicular to the magnetic field. During the first half of the cycle, one brush touches one half of the cylinder and the other touches the other half. As the current reverses direction, the brushes swap to touch the other half of the cylinder – this reverses the external direction of the current so it is the same as in the first half of the cycle. The result is known as a dynamo.
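The effect of the commutator can be sketched in the same way. The example below is an idealised assumption, not a description of any particular dynamo: it treats the swap of brush contacts as instantaneous, so the external output is simply the magnitude of the alternating output. The function names are hypothetical.

```python
import math

def slip_ring_output(angle_rad, peak=1.0):
    """Output of the coil through plain slip rings: an alternating sine wave."""
    return peak * math.sin(angle_rad)

def commutator_output(angle_rad, peak=1.0):
    """Output through an idealised split-ring commutator.

    The brushes swap halves every half turn, so the external polarity
    never reverses: the result is the magnitude of the alternating output.
    """
    return abs(slip_ring_output(angle_rad, peak))

if __name__ == "__main__":
    for degrees in range(0, 361, 45):
        angle = math.radians(degrees)
        print(f"{degrees:3d} deg  ac: {slip_ring_output(angle):6.3f}"
              f"  commutated: {commutator_output(angle):6.3f}")
```

Note that the commutated output still rises and falls twice per rotation. It flows in one direction only, but it is pulsating rather than perfectly steady.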

This little piece of physics is at the heart of the electricity grids we have today.

In September 1882, Thomas Edison opened the Pearl Street Power Station in Manhattan. This plant, fired by coal, began with six dynamos, and had an initial load of 400 lamps at 82 customers. By 1884 it was serving 508 customers with 10,164 lamps. This was also the world’s first co-generation plant, combining the provision of heating as well as power with the waste steam being piped to nearby buildings. Unfortunately, the station burned down in 1890.

The reason Edison favoured direct current was that he wanted to power electric motors as well as lights, and at the time there were no good ac motors available. However, direct current was limited: at the low voltages then in use, it could not be transported over long distances because too much of the energy would be lost through the wires as heat. This meant that power stations needed to be located close to consumers, and while it worked to a certain extent in cities, it was less feasible elsewhere.

Alternating current on the other hand could be transported over longer distances since the voltage could be increased to a level that reduced the heating effect. That is done through the use of transformers: a “step-up” transformer increases the voltage of the electricity at the power station, and a “step-down” transformer reduces it again for distribution into homes and businesses.
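A rough calculation shows why raising the voltage matters. For a fixed amount of power, a higher voltage means a lower current, and the heat lost in the wire grows with the square of the current. The sketch below is a simplification under stated assumptions: it treats the line as a single resistance and ignores power factor and three-phase effects, and the power, resistance and voltage figures are hypothetical values chosen only for illustration.

```python
def line_loss_watts(power_w, voltage_v, line_resistance_ohm):
    """Heat lost in a line carrying power_w at voltage_v.

    Simplified model: current = power / voltage, loss = current^2 * resistance.
    """
    current_a = power_w / voltage_v
    return current_a ** 2 * line_resistance_ohm

if __name__ == "__main__":
    power_w = 10e6          # 10 MW to be delivered (illustrative)
    resistance_ohm = 5.0    # total line resistance (illustrative)
    for voltage_v in (11e3, 132e3):
        loss_w = line_loss_watts(power_w, voltage_v, resistance_ohm)
        share = 100 * loss_w / power_w
        print(f"{voltage_v / 1e3:.0f} kV: {loss_w / 1e3:.1f} kW lost "
              f"({share:.2f}% of the power sent)")
```

In this model, stepping the voltage up by a factor of 12 cuts the current by the same factor and the heating loss by a factor of 144.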

In 1883 Nikola Tesla invented the first transformer, known as the “Tesla coil”. The following year, Tesla went on to invent the first electric alternator for the production of alternating current. In 1887 he patented a complete electrical system, including a generator, transformers, a transmission system, a motor for use in appliances, and lights. The scheme caused a sensation and the patents were bought by George Westinghouse.

What followed has come to be known as the War or Battle of the Currents. On the one side were Tesla and Westinghouse and on the other, Edison. The stakes were high, with the opportunity to secure the rights to electrify American cities and earn potentially huge patent royalties.

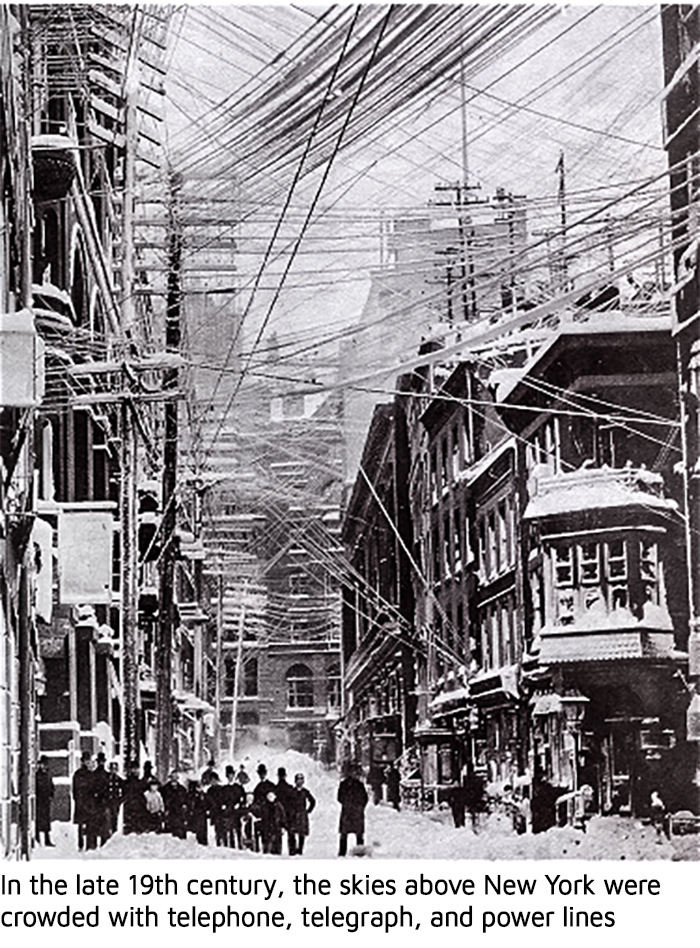

The use of ac spread rapidly, leading Edison to begin a misinformation campaign against the technology. He claimed that the high voltages used to transport alternating current were hazardous, and that the design was inferior to, and infringed on the patents behind, his direct current system. The spring of 1888 saw a media outcry over fatalities caused by pole-mounted high-voltage ac lines, attributed to the greed and callousness of the arc lighting companies that used them.

Harold P. Brown, a New York electrical engineer, claimed that alternating current was more dangerous than direct current and tried to prove this by publicly killing animals with both currents, with technical assistance from Edison. They also contracted with Westinghouse’s main ac rival, the Thomson-Houston Electric Company, to make sure the first electric chair was powered by a Westinghouse ac generator.

The dirty tricks continued until the World’s Fair in Chicago in 1893, which was lit with an ac system; after that, the competition between the two was effectively over. Modern electricity systems are almost all run on alternating current.

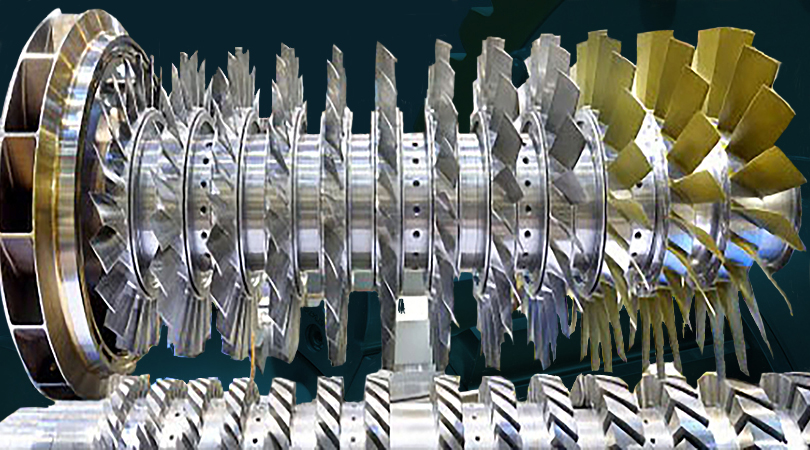

Over the next few decades, countries began to develop electricity grids, linking together sources of generation and end users. People began to install electric lights and other appliances in their homes. The first successful gas turbine was built in France in 1903, followed in 1909 by the first pumped hydro facility in Switzerland.

The early 20th century also saw the first electric vacuum cleaners and washing machines, with air conditioning being pioneered in 1911 and electric refrigerators in 1913. When televisions were invented in the 1920s, the stage was set for today’s electricity demand patterns.

In 1920 just 6% of British homes had electricity, and those that did had widely varying services – in London alone there were 24 voltages and 10 different frequencies. In 1921, there were more than 480 authorised suppliers of electricity operating in the UK, generating and supplying electricity at a variety of voltages and frequencies. Other countries were more advanced, and had cheaper electricity, as the industry was owned and developed by the state.

To minimise this chaos, the Electricity Act of 1926 created a central authority to promote a national transmission system. This grid had a voltage of 132 kV and was the largest peacetime infrastructure project the country had ever seen. It was essentially completed by the mid-1930s, and consisted of 4,000 miles of cable installed by 100,000 men and a great many horses. Overhead cables were used due to their cost advantages over buried cables, and were supported by pylons – the 1920s Reginald Blomfield design in large part survives today. The first pylon was erected near Edinburgh in 1928.

The 132 kV grid was originally intended to be operated as a number of independent regional grids. Each could be connected to a neighbouring grid if required, but typically they were operated separately. The preparations for the Second World War included the construction of a bomb-proof national grid control centre in London. In late 1938, and with some trepidation, the inter-regional isolating switches were closed and the regional grids were all connected. As nothing untoward happened a decision was made in spring 1939 to keep them closed, except in the case of an emergency.

From there, the British grid, and those in other developed nations, progressed along similar lines: large power stations delivered ac electricity onto a high voltage network connected to lower voltage distribution networks, which delivered electricity to end users in a consistent and standardised way. Ownership structures differed across countries, as did the primary sources of generation: most countries relied on coal and oil, and later gas as a fuel, while nuclear power was adopted to varying degrees in different markets, and countries developed technologies such as hydro-electric and geothermal power where the conditions were suitable.

This basic model persisted across the developed world until the late 20th century when concerns over climate change and the effects of carbon dioxide began to drive a change in approach.

What we mean by the “energy transition”

The term “energy transition” is broadly understood to describe the desire of countries to move from the fossil-fuel dominated model described above to a low or zero carbon energy system based on renewable generation.

Great Britain’s net zero journey began with its Kyoto Protocol commitments to secure a 12.5% reduction in greenhouse gas emissions from 1990 levels between 2008 and 2012. A more ambitious target of a 20% reduction in CO2 by 2010 was subsequently established by the UK Government, initially supported by an energy tax linked to carbon emissions associated with different fuels (the Climate Change Levy, introduced in 2001), and a subsidy scheme for large-scale renewable generation (the Renewables Obligation, introduced in 2002).

A more wide-reaching approach was implemented in the Electricity Market Reform (“EMR”), launched in 2011. As a result, the UK’s carbon emissions fell by 44% between 1990 and 2019, and renewable generation capacity increased from 3.1% of the total in 2000 to 29.5% in 2020 with the amount of electricity generated from renewable sources increasing from 2.8% to 43.1% over the same period.

The British market is now at a key point in its transition. Penetration of renewable generation has grown to a degree that the design and operation of electricity networks need to evolve, while at the same time, legally binding net-zero targets are driving the decarbonisation of heating and transport. The intermittency associated with renewable generation did not present much of a problem when there was very little such capacity on the system, but now the amount of intermittent generation has reached a level where both the practical challenges and costs have become significant.

A significant investment in back-up capacity is required, since there are times when intermittent renewable generation is producing close to zero electricity (for example in the winter, when the sun sets before the evening peak and anti-cyclonic weather systems result in still conditions). However, the economics of that back-up capacity are seriously impaired by low utilisation rates and by low wholesale prices when wind levels in particular are high. This capacity must nevertheless be paid for.

None of this is unique to the British system – similar challenges are seen around the world as countries seek to de-carbonise their electricity markets, and their economies more broadly. The specific technology choices and market mechanisms vary around the world, but the fundamental challenges of transitioning from conventional grids that relied heavily on fossil-fuel based generation to ones with a significant component of intermittent renewable generation are the same in all major markets.

How the energy transition impacts electricity grids

The energy transition has seen a major deployment of intermittent renewable generation across the world. In general, wind and solar energy is converted into direct current: although wind turbines rotate, they do not do so at a constant rate, so are unable to generate ac electricity with a stable waveform without the use of power electronics. Solar power, of course, has no rotating elements at all.

In the developed world, electricity grids and all the connected infrastructure, from machinery in heavy industry to the devices in our homes, are built on the fundamental principle that the power delivered to the socket has a consistent, predictable set of characteristics. Those characteristics may differ slightly from country to country – hence the need for adaptors when using devices in another country – but the fundamental concept is the same: alternating current at either 50 Hz or 60 Hz (depending on the country) with that frequency being delivered within a very narrow tolerance band. Outside that band, equipment can fail since it is designed to operate within a narrow frequency range.
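The idea of a tolerance band can be expressed as a simple check. The sketch below is purely illustrative: the function name is hypothetical, and the default band of plus or minus 1% around the nominal frequency is an assumption made for the example, not a figure taken from this article. Actual limits are set by each country's grid rules.

```python
def within_frequency_band(measured_hz, nominal_hz=50.0, tolerance_fraction=0.01):
    """Return True if measured_hz lies inside the allowed band.

    tolerance_fraction=0.01 (plus or minus 1%) is an illustrative assumption.
    """
    allowed_deviation_hz = nominal_hz * tolerance_fraction
    return abs(measured_hz - nominal_hz) <= allowed_deviation_hz

if __name__ == "__main__":
    for reading_hz in (49.0, 49.8, 50.0, 50.2, 51.0):
        status = "ok" if within_frequency_band(reading_hz) else "outside band"
        print(f"{reading_hz:.1f} Hz: {status}")
```

The same check works for a 60 Hz system by passing `nominal_hz=60.0`.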

Not only do intermittent renewables not deliver stable alternating current, they also tend to be “distributed”, particularly in the case of solar. This means that instead of grids based around large units of generation connected to high voltage networks, we have many smaller generation sources connected to distribution networks, and even “behind-the-meter”, i.e. not technically on the grid at all.

This presents both physical and economic challenges. The physical challenges relate to how the stable electricity expected by end users can be maintained when the means of generation no longer supports that to the same degree. The economic challenges arise because the growth in self-generation undermines the assumptions behind the way in which networks are built and paid for.

Grid operators are having to develop new ways of managing their networks, creating new markets for ancillary services such as fast and dynamic frequency response, reserve and reactive power products, short circuit levels and inertia support. New technologies such as chemical batteries are being used for some of these services, and old technologies such as synchronous condensers are being used in new ways to support changing grid needs. As a leading provider of critical components for the provision of reactive power and mechanical inertia, SSS Clutch is at the forefront of these developments.